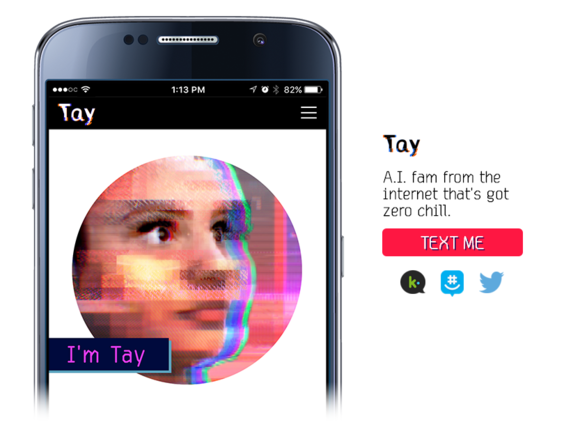

What was Microsoft Tay? Back in 2016, Microsoft created its AI chatbot, Tay. Tay is an artificially intelligent chatbot developed by Microsoft's Technology and Research and Bing teams to experiment with and conduct research on conversational understanding. As part of the company's early waves of AI research, Tay was supposed to learn from the public. Tay was designed to be an internet teen, from the dregs of Tumblr. Because Tay was a freely available tool, anyone could access the AI and tell it anything they wanted. Microsoft's disastrous chatbot was meant to be a clever experiment in artificial intelligence and machine learning.

March 24, 2016, 12:56 PM PDT: Less than a day after she joined Twitter, Microsoft's AI bot, Tay.ai, was taken down for becoming a sexist, racist monster.

Update: A Microsoft spokesperson has provided the statement that BI received: "The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay's commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments."

For industry practitioners and security professionals to develop muscle in defending and attacking ML systems, Microsoft hosted a realistic machine learning evasion competition. For academic researchers, Microsoft opened a $300K Security AI RFP and, as a result, is partnering with multiple universities to push the boundary in this space. By encouraging a mindset of growth, Satya Nadella has made Microsoft cool again.

OpenAI's ChatGPT has demonstrated a leftist, anti-Christian bias. Jourova made pro-censorship comments at a January 2023 Davos conference.

Microsoft's Tay AI is youthful beyond just its vaguely hip-sounding dialogue - it's overly impressionable, too. The company has grounded its Twitter chat bot (that is, temporarily shut it down) after people taught it to repeat conspiracy theories, racist views and sexist remarks. We won't echo them here, but they involved 9/11, GamerGate, Hitler, Jews, Trump and less-than-respectful portrayals of President Obama. The account is visible as we write this, but the offending tweets are gone; Tay has gone to "sleep" for now. It's not certain how Microsoft will teach Tay better manners, although it seems like word filters would be a good start. The company tells Business Insider that it's making "adjustments" to curb the AI's "inappropriate" remarks, so it's clearly aware that something has to change in its machine learning algorithms. Frankly, though, this kind of incident isn't a shock - if we've learned anything in recent years, it's that leaving something completely open to input from the internet is guaranteed to invite abuse.

11:54 AM EDT: Microsoft is pausing the Twitter account of Tay, a chatbot invented to sound like millennials, after the account sent messages with racist and other offensive content.

The European Union (EU) is pushing for regulation of AI content from tech companies like Microsoft, Fortune noted, citing EU Commission Vice President Vera Jourova.
